Google's new robotics AI can run without the cloud and still tie your shoes

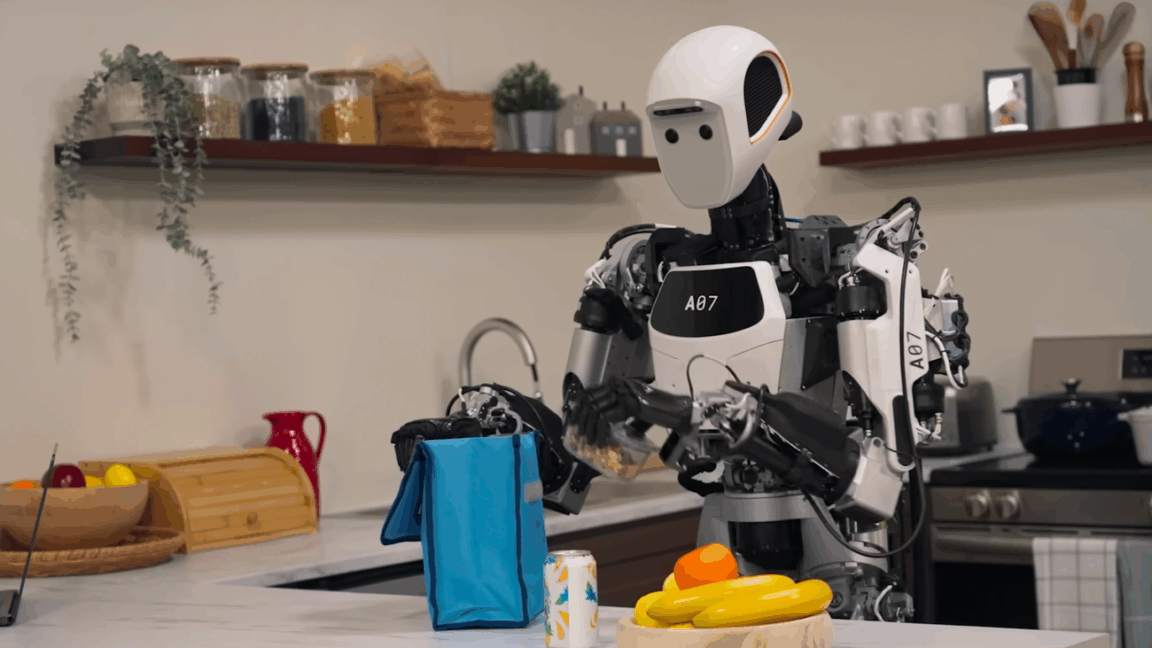

We sometimes call chatbots like Gemini and ChatGPT "robots," but generative AI is also playing a growing role in real, physical robots. After announcing Gemini Robotics earlier this year, Google DeepMind has now revealed a new on-device VLA (vision language action) model to control robots. Unlike the previous release, there's no cloud component, allowing robots to operate with full autonomy.

Carolina Parada, head of robotics at Google DeepMind, says this approach to AI robotics could make robots more reliable in challenging situations. This is also the first version of Google's robotics model that developers can tune for their specific uses. Robotics is a unique problem for AI because not only does the robot exist in the physical world, but it also changes its environment.

Whether you're having it move blocks around or tie your shoes, it's hard to predict every eventuality a robot might encounter. The traditional approach of training a robot on actions with reinforcement was very slow, but generative AI allows for much greater generalization. "It's drawing from Gemini's multimodal world understanding in order to do a completely new task," explains Carolina Parada.

"What that enables is in that same way Gemini can produce text, write poetry, just summarize an article, you can also write code, and you can also generate images. It also can generate robot actions."

General robots, no cloud needed

In the previous Gemini Robotics release (which is still the "best" version of Google's robotics tech), the platforms ran a hybrid system with a small model on the robot and a larger one running in the cloud. You've probably watched chatbots "think" for measurable seconds as they generate an output, but robots need to react quickly. If you tell the robot to pick up and move an object, you don't want it to pause while each step is generated. The local model allows quick adaptation, while the server-based model can help with complex reasoning tasks.

Google DeepMind is now unleashing the local model as a standalone VLA, and it's surprisingly robust.

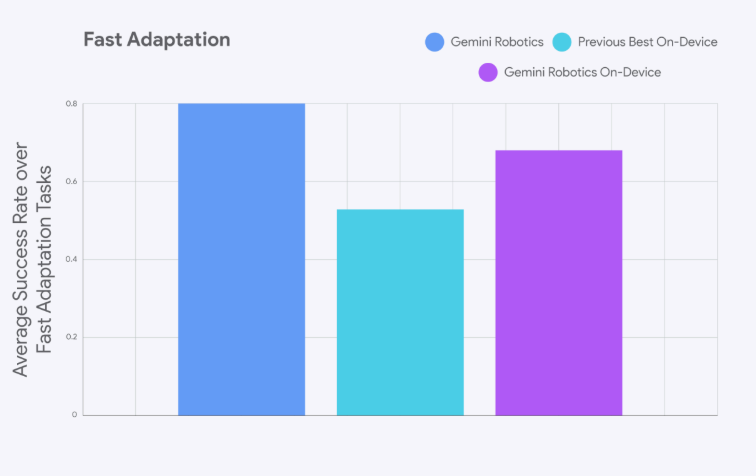

The new Gemini Robotics On-Device model is only a little less accurate than the hybrid version.

According to Parada, many tasks will work out of the box. "When we play with the robots, we see that they're surprisingly capable of understanding a new situation," Parada tells Ars.

By releasing this model with a full SDK, the team hopes developers will give Gemini-powered robots new tasks and show them new environments, which could reveal actions that don't work with the model's stock tuning.

With the SDK, robotics researchers will be able to adapt the VLA to new tasks with as few as 50 to 100 demonstrations.

A "demonstration" in AI robotics is a bit different from other areas of AI research. Parada explains that demonstrations typically involve tele-operating the robot: controlling the machinery manually to complete a task tunes the model to handle that task autonomously. While synthetic data is an element of Google's training, it's not a substitute for the real thing.

"We still find that in the most complex, dexterous behaviors, we need real data," says Parada. "But there's quite a lot that you can do with simulation." But those highly complex behaviors may be beyond the capabilities of the on-device VLA.

It should have no problem with straightforward actions like tying a shoe (a traditionally difficult task for AI robots) or folding a shirt. If, however, you wanted a robot to make you a sandwich, it would probably need a more powerful model to go through the multi-step reasoning required to get the bread in the right place.

The team sees Gemini Robotics On-Device as ideal for environments where connectivity to the cloud is spotty or non-existent. Processing the robot's visual data locally is also better for privacy, for example, in a health care environment.

Building safe robots

Safety is always a concern with AI systems, whether it's a chatbot that provides dangerous information or a robot that goes Terminator. We've all seen generative AI chatbots and image generators hallucinate falsehoods in their outputs, and the generative systems powering Gemini Robotics are no different. The model doesn't get it right every time, but giving the model a physical embodiment with cold, unfeeling metal graspers makes the issue a little more thorny.

To ensure robots behave safely, Gemini Robotics uses a multi-layered approach. "With the full Gemini Robotics, you are connecting to a model that is reasoning about what is safe to do, period," says Parada. "And then you have it talk to a VLA that actually produces options, and then that VLA calls a low-level controller, which typically has safety-critical components, like how much force you can move or how fast you can move this arm."

Importantly, the new on-device model is just a VLA, so developers will be on their own to build in safety. Google suggests they replicate what the Gemini team has done, though. It's recommended that developers in the early tester program connect the system to the standard Gemini Live API, which includes a safety layer. They should also implement a low-level controller for critical safety checks.
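As a rough illustration of what a low-level safety check of this kind might look like, the Python sketch below caps the speed and force of a motion command before it reaches the hardware, and turns any non-finite value into a stop. It is a minimal sketch under stated assumptions: `ArmCommand`, `SafetyLimits`, `enforce_limits`, and the limit values are hypothetical names and numbers chosen for illustration, and none of them come from Google's SDK or the Gemini Live API.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ArmCommand:
    """One motion request for a single arm (hypothetical structure)."""
    speed_m_s: float  # requested end-effector speed, metres per second
    force_n: float    # requested contact force, newtons


@dataclass(frozen=True)
class SafetyLimits:
    """Hard caps enforced below the model; values are illustrative only."""
    max_speed_m_s: float = 0.25
    max_force_n: float = 20.0


def _clamp(value: float, low: float, high: float) -> float:
    """Clamp value into [low, high]; non-finite input becomes 0.0 (stop)."""
    if not math.isfinite(value):
        return 0.0
    return max(low, min(high, value))


def enforce_limits(cmd: ArmCommand, limits: SafetyLimits) -> ArmCommand:
    """Return a command that never exceeds the configured speed or force."""
    return ArmCommand(
        speed_m_s=_clamp(cmd.speed_m_s, -limits.max_speed_m_s, limits.max_speed_m_s),
        force_n=_clamp(cmd.force_n, 0.0, limits.max_force_n),
    )


if __name__ == "__main__":
    # A model output asking for far more speed and force than allowed.
    requested = ArmCommand(speed_m_s=1.5, force_n=80.0)
    print(enforce_limits(requested, SafetyLimits()))
```

The point of putting this check below the model is that it holds no matter what the VLA outputs: the limits are fixed in ordinary code rather than learned, so a bad generation can at worst produce a slow, gentle wrong move.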

Anyone interested in testing Gemini Robotics On-Device should apply for access to Google's trusted tester program. Google's Carolina Parada says there have been a lot of robotics breakthroughs in the past three years, and this is just the beginning—the current release of Gemini Robotics is still based on Gemini 2.0.

Parada notes that the Gemini Robotics team typically trails behind Gemini development by one version, and Gemini 2.5 has been cited as a massive improvement in chatbot functionality. Maybe the same will be true of robots.
